It’s that time of year again for local government digital teams: the Society of IT Managers (SOCITM) are about to run the tests for their 2014 Better Connected (BC) report and, as they did last year, they’ve announced what they are going to test.

The fine folk of #LocalGovDigital are rightly urging people not to get hung up on the report and instead to focus on a user-centric approach to development, something I think needs to be shouted from the rooftops of every council building.

The problem is that many councils still have the Better Connected rating set as a Key Performance Indicator (KPI) for their website, and for them that makes ignoring the report very difficult. If your reputation inside an organisation depends on the results, are you going to ignore what they are testing for?

We are top of the league

Setting the Better Connected rating as a KPI is a direct result of local authorities’ desire to have a performance figure for everything, and of their desire to be able to compare themselves to each other. Basically, they like league tables.

When local government was getting to grips with all things digital there was very little in the way of measurement, and when Better Connected came along it filled the gap nicely.

Short term run and fix

The report is certainly responsible for a lot of improvements over the last few years. Most people don’t have the time to trawl through the 400+ local government websites to see what’s good and what’s not, but it is a dangerous way to measure your site and it can trap you in short-term development cycles.

Getting the report in March, racing to implement changes for October, then waiting for next March can suck a council into a short-term, six-month cycle: always looking to the next set of tests, with very little opportunity to look beyond the report and address some of the very real issues they may be facing with their services.

Organisations need to break out of the cycle and start to build a long-term, sustainable strategy that can see them beyond the next report and help them build a platform for real, sustainable digital service delivery.

Measure transactions the gov.uk way

For me, the most impressive thing that the Government Digital Service have delivered is the Digital Services Manual, which sets out a methodology for delivering digital services in government. The manual is a must-read for anyone delivering digital services in the public sector. It covers everything from how to run an agile project to how to decommission services when they reach end of life.

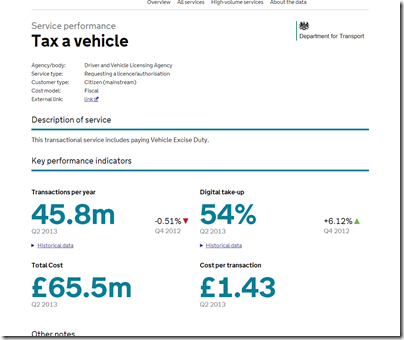

One area the service manual covers is measurement, and I feel this is where all public sector organisations should be looking when they want to get a handle on how their website is performing.

The manual recommends looking at:

- cost per transaction

- user satisfaction

- completion rate

- digital take-up

Getting take-up up and costs down are good indicators of success, while completion rate and satisfaction help you understand where the pain points are for your users.
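To make those four measures a bit more concrete, here is a rough sketch of how they might be worked out for a single service. It is only an illustration: the `ServiceStats` fields, the `service_kpis` function and the example figures are all made up for this post, and the formulas are my reading of the measures (cost divided by completed transactions, satisfied responses divided by all responses, and so on), so check the manual for the exact definitions before relying on them.

```python
from dataclasses import dataclass


@dataclass
class ServiceStats:
    """Hypothetical figures for one service over one reporting period."""
    total_cost: float            # total cost of running the service, all channels
    completed_all_channels: int  # transactions completed through any channel
    started_digital: int         # transactions started online
    completed_digital: int       # transactions completed online
    survey_responses: int        # satisfaction survey responses received
    survey_satisfied: int        # responses rated satisfied or better


def ratio(numerator: float, denominator: float) -> float:
    """Divide safely, returning 0.0 when there is nothing to measure."""
    return numerator / denominator if denominator else 0.0


def service_kpis(s: ServiceStats) -> dict:
    """Work out the four measures (assumed definitions, see the note above)."""
    return {
        "cost_per_transaction": ratio(s.total_cost, s.completed_all_channels),
        "user_satisfaction": ratio(s.survey_satisfied, s.survey_responses),
        "completion_rate": ratio(s.completed_digital, s.started_digital),
        "digital_take_up": ratio(s.completed_digital, s.completed_all_channels),
    }


# Made-up example figures, not real council data.
example = ServiceStats(
    total_cost=50_000.0,
    completed_all_channels=10_000,
    started_digital=5_000,
    completed_digital=4_000,
    survey_responses=500,
    survey_satisfied=400,
)
kpis = service_kpis(example)
for name, value in kpis.items():
    print(f"{name}: {value:.2f}")
```

Tracked month by month, numbers like these give you a trend that belongs to your own users rather than to an annual external test.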

You can see the results of these measurements for gov.uk through the transaction explorer and the performance platform.

Measure contact

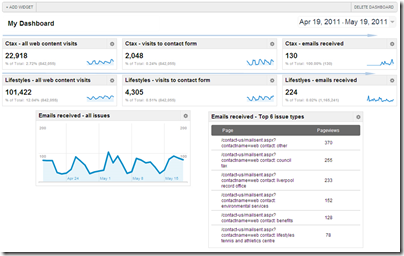

Another measure that you might choose to include is contact rates. The ability to measure contact rates was built into the design of liverpool.gov.uk: users have to click through to telephone numbers and addresses, which gives the city the ability to measure contact from any section or page of the site.

This in turn allows them to highlight areas of high contact and concentrate resources on those areas of their website.
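As a rough sketch of how that kind of analysis could work (this is not Liverpool's actual implementation, and the event names `"view"` and `"contact_click"` are invented for the example), you could count page views and contact click-throughs per section and rank the sections by the ratio of the two:

```python
from collections import Counter


def contact_rates(events):
    """Rank site sections by contact rate, highest first.

    `events` is an iterable of (section, event_type) pairs, where
    event_type is "view" or "contact_click" (hypothetical names).
    """
    views = Counter()
    clicks = Counter()
    for section, event_type in events:
        if event_type == "view":
            views[section] += 1
        elif event_type == "contact_click":
            clicks[section] += 1

    # Contact rate = contact click-throughs divided by page views.
    rates = {section: clicks[section] / n for section, n in views.items() if n}
    return sorted(rates.items(), key=lambda item: item[1], reverse=True)


# Made-up example data for two sections of a site.
events = (
    [("bins", "view")] * 4
    + [("bins", "contact_click")] * 2
    + [("parking", "view")] * 10
    + [("parking", "contact_click")] * 1
)
ranked = contact_rates(events)
for section, rate in ranked:
    print(f"{section}: {rate:.0%} of views led to a contact click")
```

The sections at the top of the list are the ones where people most often give up on the web content and reach for the phone, which makes them good candidates for attention first.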

Chase customers not badges

While SOCITM do a very thorough job of analysing all local government websites and teasing out best practice in the field, pinning your site to the badge and not looking at what your customers want is a dangerous game. Scores can change based on a number of factors, such as test type, reviewer and many local variables, none of which are dictated by your users.

So if you are using a user-centric approach to design, isn’t it right that you should be using user-centric measures to see how your site is performing?

Kevin Jump is the director of Jumoo, a digital services company that helps people do evidence-based, customer-focused digital stuff - well. He can rant on about this stuff all day. If you would like to get him to rant at someone in your organisation, why not drop us a line?